by Julian Barbour, College Farm, Banbury, UK.

Tim Koslowski, Perimeter Institute

Title: Shape dynamics

PDF of the talk (500k)

Audio [.wav 33MB], Audio [.aif 3MB].

I will attempt to give some conceptual background to the recent seminar by Tim Koslowski (pictured left) on Shape Dynamics and the technical possibilities that it may open up. Shape dynamics arises from a method, called best matching, by which motion and more generally change can be quantified. The method was first proposed in 1982, and its furthest development up to now is described here. I shall first describe a common alternative.

Newton’s Method of Defining Motion

Newton’s method, still present in many theoreticians’ intuition, takes space to be real like a perfectly smooth table top (suppressing one space dimension) that extends to infinity in all directions. Imagine three particles that in two instants form slightly different triangles (1 and 2). The three sides of each triangle define the relative configuration. Consider triangle 1. In Newtonian dynamics, you can locate and orient 1 however you like. Space being homogeneous and isotropic, all choices are on an equal footing. But 2 is a different relative configuration. Can one say how much each particle has moved? According to Newton, many different motions of the particles correspond to the same change of the relative configuration.

Keeping the position of 1 fixed, one can place the centre of mass of 2, C2, anywhere; the orientation of 2 is also free. In three-dimensional space, three degrees of freedom correspond to the possible changes of the sides of the triangle (relative data), three to the position of C2, and three to the orientation. The three relative data cannot be changed, but the choices made for the remainder are disturbingly arbitrary. In fact, Galilean relativity means that the position of C2 is not critical. But the orientational data are crucial. Different choices for them put different angular momenta L into the system, and the resulting motions are very different. Two snapshots of relative configurations contain no information about L; you need three to get a handle on L. Now we consider the alternative.

Dynamics Based on Best Matching

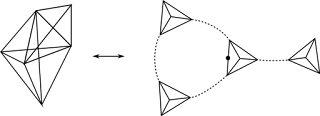

The definition of motion by best matching is illustrated in the figure. Dynamics based on it is more restrictive than Newtonian dynamics. The reason can be ‘read off’ from the figure. Best matching, as shown in b, does two things. It brings the centers of mass of the two triangles to a common point and sets their net relative rotation about it to zero. This last means that a dynamical system governed by best matching is always constrained, in Newtonian terms, to have vanishing total angular momentum L. In fact, the dynamical equations are Newtonian; the constraint L = 0 is maintained by them if it holds at any one instant.

Figure 1. The Definition of Motion by Best Matching. Three particles, at the vertices of the grey and dashed triangles at two instants, move relative to each other. The difference between the triangles is fact, but can one determine unique displacements of the particles? It seems not. Even if we hold the grey triangle fixed in space, we can place the dashed triangle relative to it in any arbitrary position, as in a. There seems to be no way to define unique displacements. However, we can bring the dashed triangle into the position b, in which it most nearly ‘covers’ the grey triangle. A natural minimizing procedure determines when ‘best matching’ is achieved. The displacements that take one from the grey to the dashed triangle are not defined relative to space but relative to the grey triangle. The procedure is reciprocal and must be applied to the complete dynamical system under consideration.
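The minimizing procedure in the caption can be made concrete for particles in a plane. What follows is only a minimal sketch under stated assumptions: the function name `best_match`, the choice of a mass-weighted sum of squared separations as the quantity to minimize, and the restriction to two dimensions are illustrative choices, not taken from the talk.

```python
import numpy as np

def best_match(ref, other, masses=None):
    """Place `other` on `ref` (both (N, 2) arrays) by translation and rotation.

    Returns the repositioned copy of `other`, the centred copy of `ref`,
    and the residual mismatch between them.
    """
    ref = np.asarray(ref, dtype=float)
    other = np.asarray(other, dtype=float)
    m = np.ones(len(ref)) if masses is None else np.asarray(masses, dtype=float)

    # Step 1: bring both centres of mass to a common point (the origin).
    ref_c = ref - np.average(ref, axis=0, weights=m)
    oth_c = other - np.average(other, axis=0, weights=m)

    # Step 2: rotate `other` by the angle that minimizes the mass-weighted
    # sum of squared separations between corresponding particles.
    dot = np.sum(m * (oth_c[:, 0] * ref_c[:, 0] + oth_c[:, 1] * ref_c[:, 1]))
    cross = np.sum(m * (oth_c[:, 0] * ref_c[:, 1] - oth_c[:, 1] * ref_c[:, 0]))
    theta = np.arctan2(cross, dot)
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    matched = oth_c @ rot.T

    # Whatever mismatch is left is the intrinsic change of the configuration.
    mismatch = np.sum(m * np.sum((matched - ref_c) ** 2, axis=1))
    return matched, ref_c, mismatch
```

If `other` is merely a rotated and shifted copy of `ref`, the residual mismatch vanishes. For a genuinely different triangle, the remaining displacements carry no net rotation about the common centre of mass, which is the discrete counterpart of the constraint L = 0.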

So far, we have not considered size. This is where Shape Dynamics proper begins. Size implies the existence of a scale to measure it by. But, if our three particles are the universe, where is a scale to measure its size? Size is another Newtonian absolute. Best matching can be extended to include adjustment of the relative sizes. This is done for particle dynamics here. It leads to a further constraint. Not only the angular momentum but also something called the dilatational momentum must vanish. The dynamics of any universe governed by best matching becomes even more restrictive than Newtonian dynamics.
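In symbols, the two constraints just mentioned take the following form. The notation is assumed here rather than taken from the post: particle a has position x_a, measured from the centre of mass, and momentum p_a, and D denotes the dilatational momentum.

```latex
\mathbf{L} = \sum_{a} \mathbf{x}_a \times \mathbf{p}_a = 0,
\qquad
D = \sum_{a} \mathbf{x}_a \cdot \mathbf{p}_a = 0 .
```

Just as L generates overall rotations, D generates overall changes of scale, so its vanishing says that the system carries no net expansion or contraction relative to an external ruler.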

Best Matching in the Theory of Gravity

Best matching can be applied to the dynamics of geometry and compared with Einstein's general relativity (GR), which was created as a description of the four-dimensional geometry of spacetime. However, GR can be reformulated as a dynamical theory in which three-dimensional geometry (3-geometry) evolves. This was done in the late 1950s by Dirac and Arnowitt, Deser, and Misner (ADM), who found a particularly elegant way to do it that is now called the ADM formalism and is based on the Hamiltonian form of dynamics. In the ADM formalism, the diffeomorphism constraint, mentioned a few times by Tim Koslowski, plays a prominent role. Its presence can be explained by a sophisticated generalization of the particle best matching shown in the figure. This shows that the notion of change was radically modified when Einstein created GR (though this fact is rather well hidden in the spacetime formulation). The notion of change employed in GR means that it is background independent.

In the ADM formalism as it stands, there is no constraint that corresponds to best matching with respect to size. However, in addition to the diffeomorphism constraint, or rather constraints as there are infinitely many of them, there are also infinitely many Hamiltonian constraints. They reflect the absence of an external time in Einstein's theory and the almost complete freedom to define simultaneity at spatially separated points in the universe. It has proved very difficult to take them into account in a quantum theory of gravity.

Building on previous work, Tim and his collaborators Henrique Gomes and Sean Gryb have found an alternative Hamiltonian representation of dynamical geometry in which all but one of the Hamiltonian constraints can be swapped for conformal constraints. These conformal constraints arise from a best matching in which the volume of space can be adjusted with infinite flexibility. Imagine a balloon with curves drawn on it that form certain angles wherever they meet. One can imagine blowing up the balloon or letting it contract by different amounts everywhere on its surface. In this process, the angles at which the curves meet cannot change, but the distances between points can. This is called a conformal transformation and is clearly analogous to changing the overall size of figures in Euclidean space. The conformal transformations that Tim discusses in his talk are applied to curved 3-geometries that close up on themselves like the surface of the earth does in two dimensions.

The alternative, or dual, representation of gravity through the introduction of conformal best matching seems to open up new routes to quantum gravity. At the moment, the most promising looks to be the symmetry doubling idea discussed by Tim. However, it is early days. There are plenty of possible obstacles to progress in this direction, as Tim is careful to emphasize.

One of the things that intrigues me most about Shape Dynamics is that, if we are to explain the key facts of cosmology by a spatially closed expanding universe, we cannot allow completely unrestricted conformal transformations in the best matching but only the volume-preserving ones (VPCTs) that Tim discusses. This is a tiny restriction but strikes me as the very last vestige of Newton's absolute space. I think this might be telling us something fundamental about the quantum mechanics of the universe. Meanwhile it is very encouraging to see technical possibilities emerging in the new conceptual framework.
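For readers who want the balloon picture in formulas, here is one common way to write a conformal transformation of a 3-metric and the volume-preserving restriction. The symbols g_ij (the 3-metric), φ (a function of position) and the exponent convention are assumptions made for this sketch and may differ from those used in the talk.

```latex
g_{ij}(x) \;\to\; e^{4\phi(x)}\, g_{ij}(x),
\qquad
\cos\theta = \frac{g_{ij}u^i v^j}{\sqrt{g_{kl}u^k u^l}\,\sqrt{g_{mn}v^m v^n}}
\;\text{ is unchanged},
\qquad
\int d^3x\, \sqrt{g}\; e^{6\phi} = \int d^3x\, \sqrt{g}
\;\;\text{(VPCT)} .
```

The local factor cancels in the ratio that defines the angle between two directions u and v, which is why angles survive while lengths do not. The last condition fixes only a single number, the total volume, which is the sense in which the restriction to VPCTs is tiny.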

Thursday, December 15, 2011

Monday, October 31, 2011

Spin foams from arbitrary surfaces

by Frank Hellman, Albert Einstein Institute, Golm, Germany

Jacek Puchta, University of Warsaw

Title: The Feynman diagramatics for the spin foam models

PDF of the talk (3MB)

Audio [.wav 35MB], Audio [.aif 3MB].

In several previous blog posts (e.g. here) the spin foam approach to quantum gravity dynamics was introduced. To briefly summarize, this approach describes the evolution of a spin-network via a 2-dimensional surface that we can think of as representing how the network changes through time.

While this picture is intuitively compelling, at the technical level there have always been differences of opinion about what types of 2-dimensional surfaces should occur in this evolution. This question becomes particularly critical once we start trying to sum over all the different types of surfaces. The original proposal for this 2-dimensional surface approach was due to Ooguri, who allowed only a very restricted set of surfaces, namely those called "dual to triangulations of manifolds".

A triangulation is a decomposition of a manifold into simplices. The simplices in successive dimensions are obtained by adding a point and "filling in". The 0-dimensional simplex is just a single point. For the 1-dimensional simplex we add a second point and fill in the line between them. For 2-dimensions we add a third point, fill in the space between the line and the third point, and obtain a triangle. In 3-d we get a tetrahedron, and in 4-d what is called a 4-simplex.
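The "add a point and fill in" construction means that an n-dimensional simplex is fixed by its n + 1 corner points, and every subset of those points spans a lower-dimensional face. A minimal sketch of this bookkeeping follows; the helper name `faces` and the vertex labels are made up for the illustration.

```python
from itertools import combinations

def faces(simplex, k):
    """All k-dimensional faces of a simplex given as a tuple of vertex labels."""
    # A k-dimensional face is spanned by any k + 1 of the vertices.
    return list(combinations(simplex, k + 1))

tetrahedron = ("A", "B", "C", "D")    # 3-simplex: four points
triangles = faces(tetrahedron, 2)     # its triangular faces
edges = faces(tetrahedron, 1)         # its edges

four_simplex = ("A", "B", "C", "D", "E")
boundary_tetrahedra = faces(four_simplex, 3)
```

Counting the results shows that a 4-simplex is bounded by five tetrahedra, which is the fact that ties triangulation-based spin foams to a fixed valence.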

The surface "dual to a triangulation" is obtained by putting a vertex in the middle of every highest-dimensional simplex, connecting these vertices by an edge for every simplex one dimension lower, and filling in a surface for every simplex two dimensions lower. An example for the case where the highest-dimensional simplex is a triangle is given in the figure. There the vertex abc is in the middle of the triangle ABC and is connected to the neighboring vertices by the dashed lines, which indicate edges.
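For the two-dimensional case described above, the first two steps of the dual construction can be sketched in a few lines. The function name `dual_graph` and the representation of triangles as tuples of vertex labels are assumptions made for the illustration; only dual vertices and dual edges are built, not the dual faces.

```python
from collections import defaultdict
from itertools import combinations

def dual_graph(triangles):
    """Dual vertices and edges of a 2D triangulation.

    Each triangle becomes a dual vertex (identified by its index); two dual
    vertices are joined by a dual edge whenever their triangles share a side.
    """
    by_side = defaultdict(list)
    for index, tri in enumerate(triangles):
        for side in combinations(sorted(tri), 2):
            by_side[side].append(index)
    # Every side shared by two triangles contributes one dual edge.
    return sorted(
        (a, b)
        for shared in by_side.values()
        for a, b in combinations(shared, 2)
    )

strip = [("A", "B", "C"), ("B", "C", "D"), ("C", "D", "E")]
dual_edges = dual_graph(strip)
```

In this strip of three triangles the middle one shares a side with each neighbor, so the dual is a chain of three vertices joined by two edges.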

All current spin foam models were created with such triangulations in mind. In fact many of the crucial results of the spin foam approach rely explicitly on this rather technical point.

The price we pay for restricting ourselves to such surfaces is that we do not address the dynamics of the full Loop Quantum Gravity Hilbert space. The spin networks we evolve will always be 4-valent, that is, there are always four links coming into every node, whereas in the LQG Hilbert space we have spin-networks of arbitrary valence. Another issue is that we might wish to study the dynamics of the model using the simplest surfaces first to get a feeling for what to expect from the theory, and for some interesting examples, like spin foam cosmology, the triangulation based surfaces are immediately quite complicated.

The group of Jerzy Lewandowski therefore suggested generalizing the amplitudes considered so far to fairly arbitrary surfaces, and gave a method for constructing the spin foam models, previously considered only in the triangulation context, on these arbitrary surfaces. This patches one of the holes between the LQG kinematics and the spin foam dynamics. The price is that many of the geometricity results from before no longer hold.

Furthermore it now becomes necessary to effectively handle these general surfaces. A priori a lot of those exist, and it can be very hard to imagine them. In fact the early work on spin foam cosmology overlooked a large number of surfaces that potentially contribute to the amplitude. The work Jacek Puchta presented in this talk solves this issue very elegantly by developing a simple diagrammatic language that allows us to very easily work with these surfaces without having to imagine them.

This is done by describing every node in the amplitude through a network, and then giving additional information that allows us to reconstruct a surface from these networks. Without going into the full details, consider a picture like in the next figure. The solid lines on the right hand side are the networks we consider, the dashed lines are additional data. Each node of the solid lines represents a triangle, every solid line is two triangles glued along an edge, and every dashed line is two triangles glued face to face. Following this prescription we obtain the triangulation on the left. While the triangulation generated by this prescription can be tricky to visualize in general, it is easy to work directly with the networks of dashed and solid lines. Furthermore we don't need to restrict ourselves to networks that generate triangulations anymore but can consider much more general cases.

This language has a number of very interesting features. First of all these networks immediately give us the spin-networks we need to evaluate to obtain the spin foam amplitude of the surface reconstructed from them.

Furthermore it is very easy to read off what the boundary spin network of a particular surface is. As a strong demonstration of how this language simplifies thinking about surfaces, Jacek Puchta showed how all surfaces relevant for the spin foam cosmology context, which were long overlooked, are easily seen and enumerated using the new language.

The challenge ahead is to understand whether the results obtained in the simplicial setting can be translated into the more general setting at hand. For the geometricity results this looks very challenging. But in any case, the new language looks like it is going to be an indispensable tool for studying spin foams going forward, and for clarifying the link between the canonical LQG approach and the covariant spin foams.

Saturday, October 1, 2011

The Immirzi parameter in spin foam quantum gravity

by Sergei Alexandrov, Universite Montpellier, France.

James Ryan, Albert Einstein Institute

Title: Simplicity constraints and the role of the Immirzi parameter in quantum gravity

PDF of the talk (11MB)

Audio [.wav 19MB], Audio [.aif 2MB].

Spin foam quantization is an approach to quantum gravity. Firstly, it is a "covariant" quantization, in that it does not break space-time into space and time as "canonical" loop quantum gravity (LQG) does. Secondly, it is "discrete" in that it assumes at the outset that space-time has a granular structure, rather than the smooth structure assumed by "continuum" theories such as LQG. Finally, it is based on the "path integral" approach to quantization that Feynman introduced, in which one sums probability amplitudes over all possible trajectories of a system. In the case of gravity one assigns amplitudes to all possible space-times.

To write the path integral in this approach one uses a reformulation of Einstein's general relativity due to Plebanski, and one examines this reformulation for discrete space-times. From the early days the spin foam approach was considered a very close cousin of loop quantum gravity because both approaches lead to the same qualitative picture of quantum space-time. (Remarkably, although one starts with smooth space and time in LQG, after quantization a granular structure emerges.)

However, at the quantitative level, for a long time there was a striking disagreement. First of all, there were the symmetries. On the one hand, LQG involves a set of symmetries known technically as the SU(2) group, while on the other, spin foam models had symmetries associated either with the SO(4) group or with the Lorentz group. The latter are symmetries that emerge in space-time, whereas the SU(2) symmetry emerges naturally in space. It is not surprising that in a covariant approach the symmetries that emerge naturally are those of space-time, whereas in an approach where space is distinguished, as in the canonical approach, one gets symmetries associated with space. The second difference concerns the famous Immirzi parameter, which plays an extremely important role in LQG but was not even included in the spin foam approach. This is a parameter that appears in the classical formulation but has no observable consequences there (it amounts to a change of variables). After LQG quantization, however, physical predictions depend on it, in particular the value of the quantum of area and the entropy of black holes.
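To make the dependence on the Immirzi parameter concrete, here is a small sketch based on the standard LQG area spectrum, which the post does not spell out: a surface punctured by links carrying spins j contributes 8πγ√(j(j+1)) Planck areas per puncture. The function name and the sample values of γ are illustrative assumptions only.

```python
import math

def area_in_planck_units(spins, gamma):
    """Area eigenvalue, in units of the Planck length squared.

    `spins` lists the half-integer spins of the links puncturing the
    surface; `gamma` is the Immirzi parameter.
    """
    return 8 * math.pi * gamma * sum(math.sqrt(j * (j + 1)) for j in spins)

# Smallest non-zero area (one puncture with spin 1/2) for two sample values.
quantum_a = area_in_planck_units([0.5], gamma=1.0)
quantum_b = area_in_planck_units([0.5], gamma=0.5)
```

The area quantum scales linearly with γ, so a parameter that is invisible classically sets the size of the "grains" of quantum geometry.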

The situation changed a few years ago with the appearance of two new spin foam models due to Engle-Pereira-Rovelli-Livine (EPRL) and Freidel-Krasnov (FK). The new models appear to agree with LQG at the kinematical level (i.e. they have similar state spaces, although their specific dynamics may differ). Moreover, they incorporate the Immirzi parameter in a non-trivial way.

The basic idea behind these models is the following: in the Plebanski formulation general relativity is represented as a topological BF theory supplemented by certain constraints ("simplicity constraints"). BF theories are well studied topological theories (their dynamics are very simple, being limited to global properties). This simplicity implies, in particular, that it is well known how to discretize and quantize BF theories (using, for example, the spin foam approach). The fact that general relativity can be thought of as a BF theory with additional constraints gives rise to the idea that quantum gravity can be obtained by imposing the simplicity constraints directly at the quantum level on a BF theory. For that purpose, using the standard quantization map of BF theories, the simplicity constraints become quantum operators acting on the BF states. The insight of EPRL was that, once the Immirzi parameter is included, some of the constraints should not be imposed as operator identities, but in a weaker form. This makes it possible to find solutions of the quantum constraints which can be put into one-to-one correspondence with the kinematical states of LQG.
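Schematically, and only up to sign and normalization conventions that vary between authors (the form below is an assumed textbook-style presentation, not taken from the talk), the structure described above reads:

```latex
S[B,\omega] = \int \Big( B_{IJ} + \tfrac{1}{\gamma}\, {\star B}_{IJ} \Big) \wedge F^{IJ}[\omega],
\qquad
\epsilon_{IJKL}\, B^{IJ}_{\mu\nu} B^{KL}_{\rho\sigma} \;\propto\; \epsilon_{\mu\nu\rho\sigma},
\qquad
B^{IJ} = \pm \star\!\big(e^I \wedge e^J\big) \;\;\text{or}\;\; \pm\, e^I \wedge e^J .
```

Here B is a two-form valued in the gauge algebra, F is the curvature of the connection ω, γ is the Immirzi parameter and e is the tetrad. The first expression is BF theory with the Immirzi term, the second is the simplicity constraint, and the third lists its solutions, in which B is built from a tetrad and the action reduces to a form of general relativity.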

However, such a quantization procedure does not take into account the fact that the simplicity constraints are not all the constraints of the theory. They should be supplemented by certain other ("secondary") constraints, and together they form what is technically known as a system of second class constraints. These are very different from the usual kinds of constraints that appear in gauge theories. Whereas the latter correspond to the presence of symmetries in the theory, the former just freeze some degrees of freedom. In particular, at the quantum level they should be treated in a completely different way. To implement second class constraints, one should either solve them explicitly, or use an elaborate procedure called the Dirac bracket. Unfortunately, in the spin foam approach the secondary constraints have been completely ignored so far.
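For reference, the Dirac bracket mentioned above has the following standard form, where χ_a denotes the second class constraints and f, g are arbitrary phase space functions (the symbols are chosen here for illustration):

```latex
C_{ab} = \{\chi_a, \chi_b\},
\qquad
\{f, g\}_D = \{f, g\} - \{f, \chi_a\}\, (C^{-1})^{ab}\, \{\chi_b, g\} .
```

The matrix C is invertible precisely because the constraints are second class, and the bracket of any function with a constraint then vanishes identically, so the frozen degrees of freedom drop out before quantization.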

At the classical level, if one takes all these constraints into account for continuum space-times, one gets a formulation which is independent of the Immirzi parameter. Such a canonical formulation can be used for a further quantization either by the loop or the spin foam method and leads to results which are still free from this dependence. This raises questions about the compatibility of the spin foam quantization with the standard Dirac quantization based on the continuum canonical analysis.

In this seminar James Ryan tried to shed light on this issue by studying the canonical analysis of the Plebanski formulation for discrete space-times. In his work with Bianca Dittrich, he analyzed the constraints that must be imposed on the discrete BF theory to get a discretized geometry, and how they affect the structure of the theory. They found that the necessary discrete constraints are in a nice correspondence with the primary and secondary simplicity constraints of the continuum theory.

Moreover, it turned out that the independent constraints split naturally into two sets. The first set expresses the equality of two sectors of the BF theory, which effectively reduces the SO(4) gauge group to SU(2). And indeed, if one explicitly solves this set of constraints, one finds a space of states analogous to that of LQG and of the new spin foam models, dependent on the Immirzi parameter.

However, the corresponding geometries cannot be associated with piecewise flat geometries (geometries that are obtained by gluing flat simplices, just like one glues flat triangles to form a geodesic dome). These piecewise flat geometries are the geometries usually associated with spin foam models. Instead, solving the first set of constraints produces the so-called twisted geometries recently studied by Freidel and Speziale. To get the genuine discrete geometries appearing, for example, in the formulation of general relativity known as Regge calculus, one should impose an additional set of constraints given by certain gluing conditions. As Dittrich and Ryan succeeded in showing, the formulation obtained by taking into account all constraints is independent of the Immirzi parameter, as it is in the continuum classical formulation. This suggests that the quest for a consistent and physically acceptable spin foam model is far from being accomplished and that the final quantum theory might eventually be free from the Immirzi parameter.

James Ryan, Albert Einstein Institute

Title: Simplicity constraints and the role of the Immirzi parameter in quantum gravity

PDF of the talk (11MB)

Audio [.wav 19MB], Audio [.aif 2MB].

Spin foam quantization is an approach to quantum gravity. Firstly, it is a "covariant" quantization, in that it does not break space-time into space and time as "canonical" loop quantum gravity (LQG) does. Secondly, it is "discrete" in that it assumes at the outset that space-time has a granular rather than a smooth structure assumed by "continuum" theories such as LQG. Finally, it is based on the "path integral" approach to quantization that Feynman introduced in which one sums probabilities for all possible trajectories in a system. In the case of gravity one assigns probabilities to all possible space-times.

To write the path integral in this approach one uses a reformulation of Einstein's general relativity due to Plebanski. Also, one examines this reformulation for discrete space-times. From the early days it was considered as a very close cousin of loop quantum gravity because both approaches lead to the same qualitative picture of quantum space-time. (Remarkably, although one starts with smooth space and time in LQG, after quantization a granular structure emerges.) However, at the quantitative level, for long time there was a striking disagreement. First of all, there were the symmetries. On the one hand, LQG involves a set of symmetries known technically as the SU(2) group, while on the other, spin foam models had symmetries either associated with the SO(4) group or the Lorentz group. The latter are symmetries that emerge in space-time whereas the SU(2) symmetry emerges naturally in space. It is not surprising that working in a covariant approach the symmetries that emerge naturally are those of space-time whereas working in an approach where space is distinguished like in the canonical approach one gets symmetries associated with space. The second difference concerns the famous Immirzi parameter which plays an extremely important role in LQG, but was not even included in the spin foam approach. This is a parameter that appears in the classical formulation that has no observable consequences there (it amounts to a change of variables). On LQG quantization, however, physical predictions depend on it, in particular the value of the quantum of area and the entropy of black holes.

The situation has changed a few years ago with the appearance of two new spin foam models due to Engle-Pereira-Rovelli-Livine (EPRL) and Freidel-Krasnov (FK). The new models appear to agree with LQG at the kinematical level (i.e. they have similar state spaces, although their specific dynamics may differ). Moreover, they incorporate the Immirzi parameter in a non-trivial way.

The basic idea behind these models is the following: in the Plebanski formulation general relativity is represented as a topological BF theory supplemented by certain constraints ("simplicity constraints"). BF theories are well studied topological theories (their dynamics are very simple, being limited to global properties). This straightforwardness in particular implies that it is well known how to discretize and to quantize BF theories (using, for example, the spin foam approach). The fact that general relativity can be thought of as a BF theory with additional constraints gives rise to the idea that quantum gravity can be obtained by imposing the simplicity constraints directly at quantum level on a BF theory. For that purpose, using the standard quantization map of BF theories, the simplicity constraints become quantum operators acting on the BF states. The insight of EPRL was that, once the Immirzi parameter is included, some of the constraints should not be imposed as operator identities, but in a weaker form. This allows to find solutions of the quantum constraints which can be put into one-to-one correspondence with the kinematical states of LQG.

However, such quantization procedure does not take into account the fact that the simplicity constraints are not all the constraints of the theory. They should be supplemented by certain other ("secondary") constraints and together they form what is technically known as a system of second class constraints. These are very different from the usual kinds of constraints that appear in gauge theories. Whereas the latter correspond to the presence of symmetries in the theory, the former just freeze some degrees of freedom. In particular, at quantum level they should be treated in a completely different way. To implement second class constraints, one should either solve them explicitly, or use an elaborate procedure called the Dirac bracket. Unfortunately, in the spin foam approach the secondary constraints had been completely ignored so far.

At the classical level, if one takes all these constraints into account for continuum space-times, one gets a formulation which is independent of the Immirzi parameter. Such a canonical formulation can be used for a further quantization either by the loop or the spin foam method and leads to results which are still free from this dependence. This raises questions about the compatibility of the spin foam quantization with the standard Dirac quantization based on the continuum canonical analysis.

In this seminar James Ryan tried to shed light on this issue by studying a the canonical analysis of Plebanski formulation for discrete space-times. Namely, in his work with Bianca Dittrich, they analyzed constraints which must be imposed on the discrete BF theory to get a discretized geometry and how they affect the structure of the theory. They found that the necessary discrete constraints are in a nice correspondence with the primary and secondary simplicity constraints of the continuum theory.

Besides, it turned out that the independent constraints are naturally split into two sets. The first set expresses the equality of two sectors of the BF theory, which effectively reduces the SO(4) gauge group to SU(2). And indeed, if one explicitly solves this set of constraints, one finds a space of states analogous to that of LQG and the new spin foam models dependent on the Immirzi parameter.

However, the corresponding geometries cannot be associated with piecewise flat geometries (geometries that are obtained by gluing flat simplices, just like one glues flat triangles to form a geodesic dome). These piecewise flat geometries are the geometries usually associated with spin foam models. Instead, solving the first set of constraints produces the so-called twisted geometries recently studied by Freidel and Speziale. To get the genuine discrete geometries appearing, for example, in the formulation of general relativity known as Regge calculus, one should impose an additional set of constraints given by certain gluing conditions. As Dittrich and Ryan succeeded in showing, the formulation obtained by taking into account all constraints is independent of the Immirzi parameter, as it is in the continuum classical formulation. This suggests that the quest for a consistent and physically acceptable spin foam model is far from being accomplished and that the final quantum theory might eventually be free from the Immirzi parameter.

Monday, September 12, 2011

What is hidden in an infinity?

by Daniele Oriti, Albert Einstein Institute, Golm, Germany

Matteo Smerlak, ENS Lyon

Title: Bubble divergences in state-sum models

PDF of the slides (180k)

Audio [.wav 25MB], Audio [.aif 5MB].

Physicists tend to dislike infinities. In particular, they take it very badly when the result of a calculation they are doing turns out to be not some number that they could compare with experiments, but infinite. No energy or distance, no velocity or density, nothing in the world around us has infinity as its measured value. Most times, such infinities signal that we have not been smart enough in dealing with the physical system we are considering, that we have missed some key ingredient in its description, or used the wrong mathematical language in describing it. And we do not like to be reminded of our own lack of cleverness.

At the same time, and as a confirmation of the above, much important progress in theoretical physics has come out of a successful intellectual fight with infinities. Examples abound, but here is a historic one. Consider a large 3-dimensional hollow spherical object whose inside is made of some opaque material (thus absorbing almost all the light hitting it), and assume that it is filled with light (electromagnetic radiation) maintained at constant temperature. This object is named a black body. Imagine now that the object has a small hole from which a limited amount of light can exit. If one computes the total energy (i.e. considering all possible frequencies) of the radiation exiting from the hole, at a given temperature and at any given time, using the well-established laws of classical electromagnetism and classical statistical mechanics, one finds that it is infinite. Roughly, this calculation looks as follows: you have to sum all the contributions to the total energy of the radiation emitted (at any given time), coming from the infinitely many modes of oscillation of the radiation, at the temperature T. Since there are infinitely many modes, the sum diverges. Notice that the same calculation can be performed by first imagining that there exists a maximum possible mode of oscillation, and then studying what happens when this supposed maximum is allowed to grow indefinitely. After the first step, the calculation gives a finite result, but the original divergence is obtained again after the second step. In any case, this sum gives a divergent result: infinity! However, this two-step procedure allows one to understand better how the quantity of interest diverges.

Besides being a theoretical absurdity, this is simply false on experimental grounds since such radiating objects can be realized rather easily in a laboratory. This represented a big crisis in classical physics at the end of the 19th century. The solution came from Max Planck with the hypothesis that light is in reality constituted by discrete quanta (akin to matter particles), later named photons, with a consequently different formula for the emitted radiation from the hole (more precisely, for the individual contributions). This hypothesis, initially proposed for completely different motivations, not only solved the paradox of the infinite energy, but spurred the quantum mechanics revolution which led (after the work of Bohr, Einstein, Heisenberg, Schroedinger, and many others) to the modern understanding of light, atoms and all fundamental forces (except gravity).
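The two-step procedure described above (first impose a maximum mode, then let it grow) can be tried numerically. The following is only a minimal sketch, under stated assumptions: frequencies are measured by the dimensionless variable x = hν/(kT), the common prefactor of both formulas is dropped, and the function names are hypothetical. In these units the classical (Rayleigh-Jeans) contribution per unit x is x², while Planck's is x³/(eˣ − 1).

```python
import math

def rayleigh_jeans(x):
    # Classical contribution per unit x: every mode carries the same energy,
    # and the number of modes grows like x^2.
    return x * x

def planck(x):
    # Planck's contribution per unit x: x^3 / (e^x - 1).
    # expm1 keeps accuracy at small x; very large x is cut to avoid overflow.
    if x <= 0.0 or x > 700.0:
        return 0.0
    return x ** 3 / math.expm1(x)

def integrate(f, x_max, steps=20000):
    # Midpoint rule on [0, x_max]: this is the "first step" with a maximum mode.
    h = x_max / steps
    return h * sum(f((i + 0.5) * h) for i in range(steps))

def truncated_energies(cutoffs):
    # The "second step": watch what happens as the maximum mode grows.
    return [(c, integrate(rayleigh_jeans, c), integrate(planck, c)) for c in cutoffs]

if __name__ == "__main__":
    for cutoff, classical, quantum in truncated_energies([5, 10, 20, 50]):
        print(f"x_max={cutoff:>3}  classical={classical:12.3f}  Planck={quantum:8.5f}")
```

In this sketch the classical total grows like the cube of the cutoff, while the Planck total settles near π⁴/15, which is the sense in which the quantum hypothesis removes the infinity.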

We see, then, that the need to understand what was really lying inside an infinity, the need to confront it, led to an important jump forward in our understanding of Nature (in this example, of light), and to a revision of our most cherished assumptions about it. The infinity was telling us just that. Interestingly, a similar theoretical phenomenon seems now to suggest that another, maybe even greater jump forward is needed, along with a new understanding of gravity and of spacetime itself.

An object that is theoretically very close to a perfect black body is a black hole. Our current theory of matter, quantum field theory, in conjunction with our current theory of gravity, General Relativity, predicts that such a black hole will emit thermal radiation at a constant temperature inversely proportional to the mass of the black hole. This is called Hawking radiation. This result, together with the description of black holes provided by general relativity, also suggests that black holes have an entropy associated with them, measuring the number of their intrinsic degrees of freedom. Because a black hole is nothing but a particular configuration of space, this entropy is then a measure of the intrinsic degrees of freedom of (a region of) space itself! However, first of all we have no real clue what these intrinsic degrees of freedom are; second, if the picture of space provided by general relativity is correct, their number and their corresponding entropy are infinite!

This fact, together with a large number of other results and conceptual puzzles, prompted a large part of the theoretical physics community to look for a better theory of space (and time), possibly based on quantum mechanics (taking on board the experience from history): a quantum theory of space-time, a quantum theory of gravity.

It should not be understood that the transition from classical to quantum mechanics led us away from the problem of infinities in physics. On the contrary, our best theories of matter and of fundamental forces, quantum field theories, are full of infinities and divergent quantities. What we have learned, however, from quantum field theories, is exactly how to deal with such infinities in rather general terms, what to expect, and what to do when such infinities present themselves. In particular, we have learned another crucial lesson about nature: physical phenomena look very different at different energy and distance scales, i.e. if we look at them very closely or if they involve higher and higher energies. The methods by which we deal with this scale dependence go under the name of renormalization group, now a crucial ingredient of all theories of particles and materials, both microscopic and macroscopic. How this scale dependence is realized in practice depends of course on the specific physical system considered.

Let us consider a simple example. Consider the dynamics of a hypothetical particle with mass m and no spin; assume that what can happen to this particle during its evolution is only one of the following two possibilities: it can either disintegrate into two new particles of the same type or disintegrate into three particles of the same type. Also, assume that the inverse processes are allowed (that is, two particles can disappear and give rise to a single new one, and three particles can do the same). So there are two possible ‘interactions’ that this type of particle can undergo, two possible fundamental processes that can happen to it. To each of them, we associate a parameter, called a ‘coupling constant’, that indicates how strong each possible interaction process is (compared with each other and with other possible processes due for example to the interaction of the particles with gravity or with light etc.), one for the process involving three particles, and one for the one involving four particles (this is counting incoming and outgoing particles). Now, the basic object that a quantum field theory allows us to compute is the probability (amplitude) that, if I first see a number n of particles at a certain time, at a later time I will instead see m particles, with m different from n (because some particles will have disintegrated and others will have been created). All the other quantities of physical interest can be obtained using these probabilities.

Moreover, the theory tells me exactly how this probability should be computed. It goes roughly as follows. First, I have to consider all possible processes leading from n particles to m particles, including those involving an infinite number of elementary creation/disintegration processes. These can be represented by graphs (called Feynman graphs) in which each vertex represents a possible elementary process (see the figure for an example of such a process, made out of interactions involving three particles only, with its associated graph).

A graph describing a sequence of 3-valent elementary interactions for a point particle, with 2 particles measured both at the initial and at the final time (to be read from left to right)

Second, each of these processes should be assigned a probability (amplitude), that is, a function of the mass of the particle considered and of the ‘coupling constants’. Third, this amplitude is in turn a function of the energies of each particle involved in any given process (each particle corresponding to a single line in the graph representing the process), and this energy can be anything, from zero to infinity. The theory tells me what form the probability amplitude has. Now the total probability for the measurement of n particles first and m particles later is computed by summing over all processes/graphs (including those composed of infinitely many elementary processes) and all the energies of particles involved in them, weighted by the probability amplitudes.

Now, guess what? The above calculation typically gives the always feared result: infinity. Basically, everything that could go wrong, actually goes wrong, as in Murphy’s law. Not only does the sum over all the graphs/processes give a divergent answer, but the intermediate sum over energies diverges as well. However, as we anticipated, we now know how to deal with this kind of infinity; we are not scared anymore and, actually, we have learnt what such infinities mean, physically. The problem mainly arises when we consider higher and higher energies for the particles involved in the process. For simplicity imagine that all the particles have the same energy E, and assume this can take any value from 0 to a maximum value Emax. Just like in the black body example, the existence of the maximum implies that the sum over energies is a finite number, so everything up to here goes fine. However, when we let the maximal energy become infinite, typically the same quantity becomes infinite.

We have done something wrong; let’s face it: there is something we have not understood about the physics of the system (simple particles as they may be). It could be that, as in the case of the blackbody radiation, we are missing something fundamental about the nature of these particles, and we have to change the whole probability amplitude. Maybe other types of particles have to be considered as created out of the initial ones. All this could be. However, what quantum field theory has taught us is that, before considering these more drastic possibilities, one should try to re-write the above calculation by considering coupling constants and mass that themselves depend on the scale Emax, and then compute again the probability amplitude, but now using these ‘scale dependent’ constants, and check if one can now consider the case of Emax growing up to infinity, i.e. consider arbitrary energies for the particles involved in the process. If this can be done, i.e. if one can find coupling constants dependent on the energies such that now the result of sending Emax to infinity, i.e. considering larger and larger energies, is a finite, sensible probability, then there is no need for further modifications of the theory, and the physical system considered, i.e. the (system of) particles, is under control.
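The logic of ‘scale dependent’ constants can be made concrete with a deliberately artificial toy. The sketch below is not a real quantum field theory calculation: the formula for the observable, the constants C and MU, and the function names are all assumptions chosen only so that the result grows without bound (logarithmically) with Emax when the coupling is held fixed. The point is to show that one can instead let the coupling depend on Emax in such a way that the computed quantity stays the same for every cutoff.

```python
import math

C = 0.5    # hypothetical strength of the cutoff dependence (assumption)
MU = 1.0   # hypothetical reference energy scale (assumption)

def observable(g_bare, e_max):
    # Toy "measured" quantity: a first term plus a correction that grows
    # like log(e_max). At fixed g_bare it diverges as e_max goes to infinity.
    log_term = math.log(e_max / MU)
    return g_bare + C * log_term * g_bare ** 2

def running_coupling(g_measured, e_max):
    # Coupling as a function of the cutoff, chosen so that
    # observable(running_coupling(g, e_max), e_max) == g for every e_max >= MU.
    # It is the positive root of C*L*g^2 + g - g_measured = 0, written in a
    # form that is also valid when L = log(e_max / MU) is zero.
    log_term = math.log(e_max / MU)
    root = math.sqrt(1.0 + 4.0 * C * log_term * g_measured)
    return 2.0 * g_measured / (1.0 + root)

if __name__ == "__main__":
    for e_max in (1.0, 1e3, 1e6, 1e12):
        g = running_coupling(0.3, e_max)
        print(f"Emax={e_max:8.0e}  fixed coupling -> {observable(0.3, e_max):7.3f}"
              f"  running coupling g={g:6.4f} -> {observable(g, e_max):5.3f}")
```

In this toy the running coupling shrinks as Emax grows, while the quantity computed with it stays at the chosen value; whether something similar can be arranged in a given real theory is exactly the question the text describes.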

What does all this teach us? It teaches us that the type of interactions that the system can undergo and their relative strengths depend on the scale at which we look at the system, i.e. on what energy is involved in any process the system is experiencing. For example, it could happen that when Emax becomes higher and higher, the coupling constant as a function of Emax becomes zero. This would mean that, at very high energies, the process of disintegration of one particle into two (or two into one) does not happen anymore, and only the one involving four particles takes place. Pictorially, only graphs of a certain shape become relevant. Or, it could happen that, at very high energies, the mass of the particles becomes zero, i.e. the particles become lighter and lighter, eventually propagating just like photons do. The general lesson, beyond technicalities and specific cases, is that for any given physical system it is crucial to understand exactly how the quantities of interest diverge, because in the details of such divergence lies important information about the true physics of the system considered. The infinities in our models should be tamed, explored in depth, and listened to.

This is what Matteo Smerlak and Valentin Bonzom have done in the work presented at the seminar, for some models of quantum space that are currently at the center of attention of the quantum gravity community. These are so-called spin foam models, in which quantum space is described in terms of spin networks (graphs whose links are assigned discrete numbers, spins, representing elementary geometric data) or equivalently in terms of collections of triangles glued to one another along edges, and whose geometry is specified by the length of all such edges. Spin foam models are then closely related both to loop quantum gravity, whose dynamical aspects they seek to define, and to other approaches to quantum gravity like simplicial gravity. These models, very much like models for the dynamics of ordinary quantum particles, aim to compute (among other things) the probability to measure a given configuration of quantum space, represented again as a bunch of triangles glued together or as a spin network graph. Notice that here a ‘configuration of quantum space’ means both a given shape of space (it could be a sphere, a doughnut, or any other fancier shape), and a given geometry (it could be a very big or a very small sphere, a sphere with some bumps here and there, etc). One could also consider computing the probability of a transition from a given configuration of quantum space to a different one.

More precisely, the models that Bonzom and Smerlak studied are simplified ones (with respect to those that aim at describing our 4-dimensional space-time) in which the dynamics is such that, whatever the shape and geometry of space one is considering, during its evolution, should one measure the curvature of the same space at any given location, one would find zero. In other words these models only consider flat space-times. This is of course a drastic simplification but not such that the resulting models become uninteresting. On the contrary, these flat models are not only perfectly fine to describe quantum gravity in the case in which space has only two dimensions, rather than three, but are also the very basis for constructing realistic models for 3-dimensional quantum space, i.e. 4-dimensional quantum spacetime. As a consequence, these models, together with the more realistic ones, have been a focus of attention of the community of quantum gravity researchers.

What is the problem being discussed, then? As you can imagine, the usual one: when one tries to compute the mentioned probability for a certain evolution of quantum space, even within these simplified models, the answer one gets is the ever-present, but by now only slightly intimidating, infinity. What does the calculation look like? It looks very similar to the calculation for the probability of a given process of evolution of particles in quantum field theory. Consider the case in which space is 2-dimensional and therefore space-time is 3-dimensional. Suppose you want to consider the probability of measuring first n triangles glued along one another to form, say, a 2-dimensional sphere (the surface of a soccer ball) of a given size, and then m triangles now glued to form, say, the surface of a doughnut. Now take a collection of an arbitrary number of triangles and glue them to one another along edges to form a 3-dimensional object of your choice, just like kids stick LEGO blocks to one another to form a house or a car or some spaceship (you see, science is in many ways the development of children’s curiosity by other means). It could be as simple as a soccer ball, in principle, or something extremely complicated, with holes, multiple connections, anything. There is only one condition on the 3-dimensional object you can build: its surface should be formed, in the example we are considering here, by two disconnected parts: one in the shape of a sphere made of n triangles, and one in the shape of the surface of a doughnut made of m triangles. This condition would for example prevent you from building a soccer ball, which you could do, instead, if you wanted to consider only the probability of measuring n triangles forming a sphere, and no doughnut was involved. Too bad. We’ll be lazy in this example and consider a doughnut but no soccer ball. Anyway, apart from this, you can do anything.

Let us pause for a second to clarify what it means for a space to have a given shape. Consider a point on the sphere and take a path on it that starts at the given point and after a while comes back to the same point, forming a loop. Now you see that there is no problem in taking this loop to become smaller and smaller, eventually shrinking to a point and disappearing. Now do the same operation on the surface of a doughnut. You will see that certain loops can again be shrunk to a point and made to disappear, while others cannot. These are the ones that go around the hole of the doughnut. So you see that operations like these can help us determine the shape of our space. The same holds true for 3d spaces; you only need many more types of operations of this kind. Ok, now you finish building your 3-dimensional object made of as many triangles as you want. Just like the triangles in the boundary of the 3d object (those forming the sphere and the doughnut), those forming the 3d object also come with numbers associated to the edges of the triangles. These numbers, as said, specify the geometry of all the triangles, and therefore of the sphere, of the doughnut and of the 3d object that has them on its boundary.
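That the shape of a surface can be read off from the way its triangles are glued can be checked with a few lines of code. The sketch below does not implement the loop-shrinking test described above; it swaps in a simpler, standard tool, the Euler characteristic (number of vertices minus number of edges plus number of triangles), which equals 2 for any triangulated sphere and 0 for any triangulated doughnut surface. The two example triangulations and all function names are illustrative assumptions.

```python
def euler_characteristic(triangles):
    # triangles: list of 3-tuples of vertex labels; shared labels encode the gluing.
    vertices = {v for tri in triangles for v in tri}
    edges = {frozenset(pair)
             for a, b, c in triangles
             for pair in ((a, b), (b, c), (a, c))}
    faces = {frozenset(tri) for tri in triangles}
    return len(vertices) - len(edges) + len(faces)

def tetrahedron_boundary():
    # Four triangles glued along edges: the simplest triangulated sphere.
    return [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]

def torus_grid(n=3, m=3):
    # An n-by-m grid of squares with opposite sides identified (a doughnut
    # surface); each square is cut into two triangles. Use n, m >= 3.
    def label(i, j):
        return (i % n) * m + (j % m)
    triangles = []
    for i in range(n):
        for j in range(m):
            a, b = label(i, j), label(i + 1, j)
            c, d = label(i, j + 1), label(i + 1, j + 1)
            triangles.append((a, b, d))
            triangles.append((a, d, c))
    return triangles

if __name__ == "__main__":
    print("sphere:", euler_characteristic(tetrahedron_boundary()))
    print("doughnut:", euler_characteristic(torus_grid()))
```

The number depends only on the shape, not on how many triangles are used, which is why it can tell a sphere from a doughnut regardless of the details of the triangulation.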

A collection of glued triangles forming a sphere (left) and a doughnut (right); the interior 3d space can also be built out of glued triangles having the given shape on the boundary: for the first object, the interior is a ball; for the second it forms what is called a solid torus. Pictures from http://www.hakenberg.de/

The theory (the spin foam model you are studying) should give you a probability for the process considered. If the triangles forming the sphere represent how quantum space was at first, and the triangles forming the doughnut how it is in the end, the 3d object chosen represents a possible quantum space-time. In the analogy with the particle process described earlier, the n triangles forming a sphere correspond to the initial n particles, the m triangles forming the doughnut correspond to the final m particles, and the triangulated 3d object is the analogue of a possible ‘interaction process’, a possible history of triangles being created/destroyed, forming different shapes and changing their size; this size is encoded in their edge lengths, which are the analogue of the energies of the particles. The spin foam model now gives you the probability for the process in the form of a sum over the probabilities for all possible assignments of lengths to the edges of the 3d object, each probability function enforcing that the 3d object is flat (it gives probability equal to zero if the 3d object is not flat). As anticipated, the above calculation gives the usual nonsensical infinity as a result. But again, we now know that we should get past the disappointment, and look more carefully at what this infinity hides. So what one does is again to imagine that there is a maximal length that edges of triangles can have, call it Emax, define the truncated amplitude and study carefully exactly how it behaves when Emax grows, when it is allowed to become larger and larger.
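To get a feeling for what ‘studying how the truncated amplitude behaves when Emax grows’ means in practice, here is a toy illustration, not the computation presented at the seminar. It assumes a flat model based on the group SU(2), in which edge lengths are labelled by spins j = 0, 1/2, 1, ... and in which a single redundant flatness condition contributes a factor given by the sum of (2j+1)² over all spins. With a maximal spin this factor is finite, and the sketch estimates the power with which it grows. The function names are hypothetical.

```python
import math

def truncated_factor(twice_j_max):
    # Sum of (2j + 1)^2 over spins j = 0, 1/2, ..., j_max.
    # Spins are stored as the integer 2j to avoid half-integers.
    return sum((two_j + 1) ** 2 for two_j in range(twice_j_max + 1))

def growth_exponent(twice_j_max):
    # Estimate p in "factor ~ cutoff^p" by doubling the cutoff
    # and comparing the two truncated values.
    small = truncated_factor(twice_j_max)
    large = truncated_factor(2 * twice_j_max)
    return math.log(large / small) / math.log(2.0)

if __name__ == "__main__":
    for twice_j_max in (10, 100, 1000):
        print(twice_j_max, truncated_factor(twice_j_max),
              round(growth_exponent(twice_j_max), 3))
```

In this toy the estimated power approaches 3 as the cutoff grows. How many such factors appear, and with which overall power the full amplitude diverges for a given 3d object, is the kind of question the analysis of the truncated amplitude is meant to answer; that dependence is not reproduced here.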

In a sense, in this case, what is hidden inside this infinity is the whole complexity of a 3d space, at least of a flat one. What one finds is that hidden in this infinity, and carefully revealed by the scaling of the above amplitude with Emax, is all the information about the shape of the 3d object, i.e. of the possible 3d spacetime considered, and all the information about how this 3d spacetime has been constructed out of triangles. That’s lots of information!

Bonzom and Smerlak, in the work described at the seminar, have gone a very long way toward unraveling all this information, delving deeper and deeper into the hidden secrets of this particular infinity. Their work is developed in a series of papers, in which they offer a very elegant mathematical formulation of the problem and a new approach toward its solution, progressively sharpening their results and improving our understanding of these specific spin foam models for quantum gravity, of the way they depend on the shape and on the specific construction of each 3d spacetime, and of what shape and construction give, in some sense, the ‘bigger’ infinity. Their work represents a very important contribution to an area of research that is growing fast and in which many other results, from other groups around the world, had already been obtained and are still being obtained nowadays.

There is even more. The analogy with particle processes in quantum field theory can be made sharper, and one can indeed study peculiar types of field theories, called ‘group field theories’, such that the above amplitude is indeed generated by the theory and assigned to the specific process, as in spin foam models, and at the same time all possible processes are taken into account, as in standard quantum field theories for particles.

This change of framework, embedding the spin foam model into a field theory language, does not change much the problem of the divergence of the sum over the edge lengths, nor its infinite result. And it does not change the information about the shape of space encoded in this infinity. However, it changes the perspective by which we look at this infinity and at its hidden secrets. In fact, in this new context, space and space-time are truly dynamical: all possible spaces and space-times have to be considered together and on equal footing, and they compete in their contribution to the total probability for a certain transition from one configuration of quantum space to another. We cannot just choose one given shape, do the calculation and be content with it (once we have dealt with the infinity resulting from doing the calculation naively). The possible space-times we have to consider, moreover, include really weird ones, with billions of holes and strange connections from one region to another, and 3d objects that do not really look like sensible space-times at all, and so on. We have to take them all into account, in this framework.

This is of course an additional technical complication. However, it is also a fantastic opportunity. In fact, it offers us the chance to ask and possibly answer a very interesting question: why is our space-time, at least at our macroscopic scale, the way it is? Why does it look so regular, so simple in its shape, actually as simple as a sphere is? Try it: we can consider an imaginary loop located anywhere in space and shrink it to a point, making it disappear without any trouble, right? If the dynamics of quantum space is governed by a model (spin foam or group field theory) like the ones described, this is not obvious at all, but something to explain. Processes that look as nice as our macroscopic space-time are but a really tiny minority among the zillions of possible space-times that enter the sum we discussed, among all the possible processes that have to be considered in the above calculations. So, why should they ‘dominate’ and end up being the truly important ones, those that best approximate our macroscopic space-time? Why and how do they ‘emerge’ from the others and originate, from this quantum mess, the nice space-time we inhabit, in a classical, continuum approximation? What is the true quantum origin of space-time, in both its shape and geometry? The way the amplitudes grow with the increase of Emax is where the answer to these fascinating questions lies.

The answer, once more, is hidden in the very same infinity that Bonzom, Smerlak, and their many quantum gravity colleagues around the world are so bravely taming, studying, and, step by step, understanding.

Matteo Smerlak, ENS Lyon

Title: Bubble divergences in state-sum models

PDF of the slides (180k)

Audio [.wav 25MB], Audio [.aif 5MB].

Physicists tend to dislike infinities. In particular, they take it very badly when the result of a calculation they are doing turns out to be not some number that they could compare with experiments, but infinite. No energy or distance, no velocity or density, nothing in the world around us has infinity as its measured value. Most times, such infinities signal that we have not been smart enough in dealing with the physical system we are considering, that we have missed some key ingredient in its description, or used the wrong mathematical language in describing it. And we do not like to be reminded of our own lack of cleverness.

At the same time, and as a confirmation of the above, much important progress in theoretical physics has come out of a successful intellectual fight with infinities. Examples abound, but here is a historic one. Consider a large 3-dimensional hollow spherical object whose inside is made of some opaque material (thus absorbing almost all the light hitting it), and assume that it is filled with light (electromagnetic radiation) maintained at constant temperature. This object is named a black body. Imagine now that the object has a small hole from which a limited amount of light can exit. If one computes the total energy (i.e. considering all possible frequencies) of the radiation exiting from the hole, at a given temperature and at any given time, using the well-established laws of classical electromagnetism and classical statistical mechanics, one finds that it is infinite. Roughly, this calculation looks as follows: you have to sum all the contributions to the total energy of the radiation emitted (at any given time), coming from all the infinite modes of oscillation of the radiation, at the temperature T. Since there are infinite modes, the sum diverges. Notice that the same calculation can be performed by first imagining that there exists a maximum possible mode of oscillation, and then studying what

happens when this supposed maximum is allowed to grow indefinitely. After the first step, the calculation gives a finite result, but the original divergence is obtained again after the second step. In any case, this sum gives a divergent result: infinity! However, this two-step procedure allows to understand better how the quantity of interest diverges.

Beside being a theoretical absurdity, this is simply false on experimental grounds since such radiating objects can be realized rather easily in a laboratory. This represented a big crisis in classical physics at the end of the 19th century. The solution came from Max Planck with the hypothesis that light is in reality constituted by discrete quanta (akin to matter particles), later named photons, with a consequently different formula for the emitted radiation from the hole (more precisely, for the individual contributions). This hypothesis, initially proposed for completely different motivations, not only solved the paradox of the infinite energy, but spurred the quantum mechanics revolution which led (after the work of Bohr, Einstein, Heisenberg, Schroedinger, and many others) to the modern understanding of light, atoms and all fundamental forces (except gravity).

We see, then, that the need to understand what was really lying inside an infinity, the need to confront it, led to an important jump forward in our understanding of Nature (in this example, of light), and to a revision of our most cherished assumptions about it. The infinity was telling us just that. Interestingly, a similar theoretical phenomenon seems now to suggest that another, maybe even greater jump forward is needed and a new understanding of gravity and of spacetime itself.

An object that is theoretically very close to a perfect black body is a black hole. Our current theory of matter, quantum field theory, in conjunction with our current theory of gravity, General Relativity, predicts that such black hole will emit thermal radiation at a constant temperature inversely proportional to the mass of the black hole. This is called Hawking radiation. This result, together with the description of black holes provided by general relativity, also suggest that black holes have an entropy associated to them, measuring the number of their intrinsic degrees of freedom. Because a black hole is nothing but a particular configuration of space, this entropy is then a measure of the intrinsic degrees of freedom of (a region of) space itself! However, first of all we have no real clue what these intrinsic degrees of freedom are; second, if the picture of space provided by general relativity is correct, their number and their corresponding entropy is infinite!

This fact, together with a large number of other results and conceptual puzzles, prompted a large part of the theoretical physics community to look for a better theory of space (and time), possibly based on quantum mechanics (taking on board the experience from history): a quantum theory of space-time, a quantum theory of gravity.

It should not be understood that the transition from classical to quantum mechanics led us away from the problem of infinities in physics. On the contrary, our best theories of matter and of fundamental forces, quantum field theories, are full of infinities and divergent quantities. What we have learned, however, from quantum field theories, is exactly how to deal with such infinities in rather general terms, what to expect, and what to do when such infinities present themselves. In particular, we have learned another crucial lesson about nature: physical phenomena look very different at different energy and distance scales, i.e. if we look at them very closely or if they involve higher and higher energies. The methods by which we deal with this scale dependence go under the name of renormalization group, now a crucial ingredient of all theories of particles and materials, both microscopic and macroscopic. How this scale dependence is realized in practice depends of course on the specific physical system considered.

Let us consider a simple example. Consider the dynamics of a hypothetical particle with mass m and no spin; assume that only one of the following two things can happen to this particle during its evolution: it can disintegrate into two new particles of the same type, or it can disintegrate into three particles of the same type. Also assume that the inverse processes are allowed (that is, two particles can disappear and give rise to a single new one, and so can three particles). So there are two possible ‘interactions’ that this type of particle can undergo, two possible fundamental processes that can happen to it. To each of them we associate a parameter, called a ‘coupling constant’, that indicates how strong each possible interaction process is (compared with each other and with other possible processes due, for example, to the interaction of the particles with gravity or with light): one for the process involving three particles, and one for the process involving four particles (counting both incoming and outgoing particles). Now, the basic object that a quantum field theory allows us to compute is the probability (amplitude) that, if I first see a number n of particles at a certain time, at a later time I will instead see m particles, with m different from n (because some particles will have disintegrated and others will have been created). All the other quantities of physical interest can be obtained using these probabilities.

Moreover, the theory tells me exactly how this probability should be computed. It goes roughly as follows. First, I have to consider all possible processes leading from n particles to m particles, including those involving an infinite number of elementary creation/disintegration processes. These can be represented by graphs (called Feynman graphs) in which each vertex represents a possible elementary process (see the figure for an example of such a process, made out of interactions involving three particles only, with its associated graph).

A graph describing a sequence of 3-valent elementary interactions for a point particle, with 2 particles measured both at the initial and at the final time (to be read from left to right)

Second, each of these processes should be assigned a probability (amplitude), that is, a function of the mass of the particle considered and of the ‘coupling constants’. Third, this amplitude is in turn a function of the energy of each particle involved in the given process (each particle corresponding to a single line in the graph representing the process), and this energy can be anything, from zero to infinity. The theory tells me what form the probability amplitude has. Now the total probability for the measurement of n particles first and m particles later is computed by summing over all processes/graphs (including those composed of infinitely many elementary processes) and over all the energies of the particles involved in them, weighted by the probability amplitudes.

Now, guess what? The above calculation typically gives the always feared result: infinity. Basically, everything that could go wrong actually goes wrong, as in Murphy’s law. Not only does the sum over all the graphs/processes give a divergent answer, but the intermediate sum over energies diverges too. However, as we anticipated, we now know how to deal with this kind of infinity; we are not scared anymore and, actually, we have learnt what these infinities mean, physically. The problem mainly arises when we consider higher and higher energies for the particles involved in the process. For simplicity imagine that all the particles have the same energy E, and assume this can take any value from 0 to a maximum value Emax. Just like in the black body example, the existence of the maximum implies that the sum over energies is a finite number, so everything up to here goes fine. However, when we let the maximal energy become infinite, typically the same quantity becomes infinite.
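The role of the cutoff can be sketched with a toy sum over energies. The integrand E / (E² + m²) below is an assumption chosen only because its integral from 0 to Emax has a simple closed form; it is not the amplitude of any particular theory.

```python
import math

def truncated_sum(e_max, mass=1.0):
    """Closed form of the toy integral of E / (E^2 + mass^2) from 0 to e_max."""
    return 0.5 * math.log(1.0 + (e_max / mass) ** 2)

# The result is finite for every finite cutoff, but it keeps growing
# (logarithmically) as the cutoff is pushed towards infinity.
for e_max in (1e1, 1e2, 1e4, 1e8):
    print(f"Emax = {e_max:8.0e}  ->  {truncated_sum(e_max):7.3f}")
```

Every line of the output is a perfectly sensible number; the trouble only appears in the limit where Emax is removed.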

We have done something wrong; let’s face it: there is something we have not understood about the physics of the system (simple particles as they may be). It could be that, as in the case of the blackbody radiation, we are missing something fundamental about the nature of these particles, and we have to change the whole probability amplitude. Maybe other types of particles have to be considered as created out of the initial ones. All this could be. However, what quantum field theory has taught us is that, before considering these more drastic possibilities, one should try to rewrite the above calculation using coupling constants and a mass that themselves depend on the scale Emax, then compute the probability amplitude again with these ‘scale dependent’ constants, and check whether one can now let Emax grow up to infinity, i.e. consider arbitrary energies for the particles involved in the process. If this can be done, i.e. if one can find coupling constants dependent on the energies such that the result of sending Emax to infinity is a finite, sensible probability, then there is no need for further modifications of the theory, and the physical system considered, i.e. the (system of) particles, is under control.
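The idea of trading a divergence for a scale-dependent coupling can be sketched in a toy model. Assume, purely for illustration, that the amplitude computed with cutoff Emax is A = g + B·g²·ln(Emax/μ); the coefficient `B`, the reference scale `MU` and the target value `A_PHYS` are hypothetical numbers, not taken from any real theory.

```python
import math

B = 0.1        # hypothetical coefficient of the divergent term
MU = 1.0       # hypothetical reference energy scale
A_PHYS = 0.5   # hypothetical finite amplitude we want to reproduce

def amplitude(g, e_max):
    """Toy amplitude: diverges logarithmically with e_max if g is held fixed."""
    return g + B * g**2 * math.log(e_max / MU)

def scale_dependent_coupling(e_max):
    """Coupling g(e_max) that keeps the toy amplitude equal to A_PHYS.

    Positive root of B*L*g^2 + g - A_PHYS = 0 with L = ln(e_max / MU),
    written in a form that is also valid at L = 0 (assumes e_max >= MU).
    """
    log_term = math.log(e_max / MU)
    return 2.0 * A_PHYS / (1.0 + math.sqrt(1.0 + 4.0 * B * log_term * A_PHYS))

for e_max in (1e0, 1e3, 1e6, 1e12):
    g = scale_dependent_coupling(e_max)
    print(f"Emax = {e_max:6.0e}  g = {g:.4f}  amplitude = {amplitude(g, e_max):.4f}")
```

In this toy model the amplitude stays fixed at the chosen value for every cutoff, while the coupling needed to achieve this slowly shrinks as Emax grows.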

What does all this teach us? It teaches us that the type of interactions that the system can undergo and their relative strengths depend on the scale at which we look at the system, i.e. on what energy is involved in any process the system is experiencing. For example, it could happen that when Emax becomes higher and higher, the coupling constant of the three-particle interaction, as a function of Emax, goes to zero. This would mean that, at very high energies, the process of disintegration of one particle into two (or two into one) does not happen anymore, and only the one involving four particles takes place. Pictorially, only graphs of a certain shape become relevant. Or, it could happen that, at very high energies, the mass of the particles becomes zero, i.e. the particles become lighter and lighter, eventually propagating just like photons do. The general lesson, beyond technicalities and specific cases, is that for any given physical system it is crucial to understand exactly how the quantities of interest diverge, because in the details of such divergence lies important information about the true physics of the system considered. The infinities in our models should be tamed, explored in depth, and listened to.

This is what Matteo Smerlak and Valentin Bonzom have done in the work presented at the seminar, for some models of quantum space that are currently at the center of attention of the quantum gravity community. These are so-called spin foam models, in which quantum space is described in terms of spin networks (graphs whose links are assigned discrete numbers, spins, representing elementary geometric data) or equivalently in terms of collections of triangles glued to one another along edges, whose geometry is specified by the lengths of all such edges. Spin foam models are thus closely related both to loop quantum gravity, whose dynamical aspects they seek to define, and to other approaches to quantum gravity like simplicial gravity. These models, very much like models for the dynamics of ordinary quantum particles, aim to compute (among other things) the probability to measure a given configuration of quantum space, represented again as a bunch of triangles glued together or as a spin network graph. Notice that here a ‘configuration of quantum space’ means both a given shape of space (it could be a sphere, a doughnut, or any other fancier shape), and a given geometry (it could be a very big or a very small sphere, a sphere with some bumps here and there, etc). One could also consider computing the probability of a transition from a given configuration of quantum space to a different one.

More precisely, the models that Bonzom and Smerlak studied are simplified ones (with respect to those that aim at describing our 4-dimensional space-time) in which the dynamics is such that, whatever the shape and geometry of space one is considering, during its evolution, should one measure the curvature of the same space at any given location, one would find zero. In other words these models only consider flat space-times. This is of course a drastic simplification but not such that the resulting models become uninteresting. On the contrary, these flat models are not only perfectly fine to describe quantum gravity in the case in which space has only two dimensions, rather than three, but are also the very basis for constructing realistic models for 3-dimensional quantum space, i.e. 4-dimensional quantum spacetime. As a consequence, these models, together with the more realistic ones, have been a focus of attention of the community of quantum gravity researchers.

What is the problem being discussed, then? As you can imagine, the usual one: when one tries to compute the mentioned probability for a certain evolution of quantum space, even within these simplified models, the answer one gets is the ever-present, but by now only slightly intimidating, infinity. What does the calculation look like? It looks very similar to the calculation for the probability of a given process of evolution of particles in quantum field theory. Consider the case in which space is 2-dimensional and therefore space-time is 3-dimensional. Suppose you want to consider the probability of measuring first n triangles glued to one another to form, say, a 2-dimensional sphere (the surface of a soccer ball) of a given size, and then m triangles now glued to form, say, the surface of a doughnut. Now take a collection of an arbitrary number of triangles and glue them to one another along edges to form a 3-dimensional object of your choice, just as kids stick LEGO blocks to one another to form a house or a car or some spaceship (you see, science is in many ways the development of children’s curiosity by other means). It could be as simple as a soccer ball, in principle, or something extremely complicated (with holes, multiple connections, anything). There is only one condition on the 3-dimensional object you can build: its surface should be formed, in the example we are considering here, by two disconnected parts: one in the shape of a sphere made of n triangles, and one in the shape of the surface of a doughnut made of m triangles. This condition would for example prevent you from building a soccer ball, which you could do, instead, if you wanted to consider only the probability of measuring n triangles forming a sphere, and no doughnut was involved. Too bad. We’ll be lazy in this example and consider a doughnut but no soccer ball. Anyway, apart from this, you can do anything.

Let us pause for a second to clarify what it means for a space to have a given shape. Consider a point on the sphere and take a path on it that starts at the given point and after a while comes back to the same point, forming a loop. Now you see that there is no problem in taking this loop to become smaller and smaller, eventually shrinking to a point and disappearing. Now do the same operation on the surface of a doughnut. You will see that certain loops can again be shrunk to a point and made to disappear, while others cannot. These are the ones that go around the hole of the doughnut. So you see that operations like these can help us determine the shape of our space. The same holds true for 3d spaces; you only need many more operations of this type. Ok, now you finish building your 3-dimensional object made of as many triangles as you want. Just like the triangles on the boundary of the 3d object (those forming the sphere and the doughnut), the triangles forming the 3d object itself come with numbers associated with their edges. These numbers, as said, specify the geometry of all the triangles, and therefore of the sphere, of the doughnut and of the 3d object that has them on its boundary.

A collection of glued triangles forming a sphere (left) and a doughnut (right); the interior 3d space can also be built out of glued triangles having the given shape on the boundary: for the first object, the interior is a ball; for the second it forms what is called a solid torus. Pictures from http://www.hakenberg.de/
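That a collection of glued triangles really knows whether it forms a sphere or a doughnut can be checked with a small computation. Instead of shrinking loops, the sketch below uses a different and simpler diagnostic, the Euler characteristic V - E + F (number of vertices minus edges plus triangles), which equals 2 for any triangulated sphere and 0 for any triangulated doughnut. The two small triangulations are examples constructed here, not the ones in the pictures.

```python
from itertools import combinations

def euler_characteristic(triangles):
    """Return V - E + F for a surface given as a list of vertex triples."""
    vertices = {v for tri in triangles for v in tri}
    edges = {frozenset(pair) for tri in triangles for pair in combinations(tri, 2)}
    return len(vertices) - len(edges) + len(triangles)

def octahedron_sphere():
    """Eight triangles glued into a sphere (the surface of an octahedron)."""
    # Vertices 0/1, 2/3 and 4/5 are the opposite corners along three axes.
    return [(x, y, z) for x in (0, 1) for y in (2, 3) for z in (4, 5)]

def grid_torus(n=3, m=3):
    """2*n*m triangles glued into a doughnut: an n-by-m grid with opposite sides identified."""
    def vertex(i, j):
        return (i % n) * m + (j % m)
    triangles = []
    for i in range(n):
        for j in range(m):
            a, b = vertex(i, j), vertex(i + 1, j)
            c, d = vertex(i + 1, j + 1), vertex(i, j + 1)
            # Split each grid square into two triangles along its diagonal.
            triangles += [(a, b, c), (a, c, d)]
    return triangles

print(euler_characteristic(octahedron_sphere()))  # 2 for the sphere
print(euler_characteristic(grid_torus()))         # 0 for the doughnut
```

The answer does not change if the surfaces are cut into more or differently arranged triangles, which is what makes it a statement about shape rather than about geometry.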

The theory (the spin foam model you are studying) should give you a probability for the process considered. If the triangles forming the sphere represent how quantum space was at first, and the triangles forming the doughnut how it is in the end, the 3d object chosen represents a possible quantum space-time. In the analogy with the particle process described earlier, the n triangles forming a sphere correspond to the initial n particles, the m triangles forming the doughnut correspond to the final m particles, and the triangulated 3d object is the analogue of a possible ‘interaction process’, a possible history of triangles being created/destroyed, forming different shapes and changing their size; this size is encoded in their edge lengths, which are the analogue of the energies of the particles. The spin foam model now gives you the probability for the process in the form of a sum over the probabilities for all possible assignments of lengths to the edges of the 3d object, each probability function enforcing that the 3d object is flat (it gives probability equal to zero if the 3d object is not flat). As anticipated, the above calculation gives the usual nonsensical infinity as a result. But again, we now know that we should get past the disappointment, and look more carefully at what this infinity hides. So what one does is again to imagine that there is a maximal length that edges of triangles can have, call it Emax, define the truncated amplitude, and study carefully how it behaves when Emax is allowed to become larger and larger.

In a sense, in this case, what is hidden inside this infinity is the whole complexity of a 3d space, at least of a flat one. What one finds is that hidden in this infinity, and carefully revealed by the scaling of the above amplitude with Emax, is all the information about the shape of the 3d object, i.e. of the possible 3d spacetime considered, and all the information about how this 3d spacetime has been constructed out of triangles. That’s a lot of information!
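What "studying the scaling with Emax" means in practice can be sketched with a toy truncated sum. The weights (2j+1)² for half-integer labels j are an assumption chosen for illustration; this is not the amplitude of the models discussed in the seminar. The point is only that the rate of growth with the cutoff can be read off numerically as an exponent.

```python
import math

def truncated_amplitude(cutoff):
    """Toy truncated sum of (2j+1)^2 over j = 0, 1/2, 1, ..., cutoff."""
    # With d = 2j + 1, the values of d run over 1, 2, ..., 2*cutoff + 1.
    top = int(round(2 * cutoff)) + 1
    return sum(d * d for d in range(1, top + 1))

def scaling_exponent(cutoff):
    """Estimate p in amplitude ~ cutoff**p by comparing cutoff and 2*cutoff."""
    ratio = truncated_amplitude(2 * cutoff) / truncated_amplitude(cutoff)
    return math.log(ratio, 2)

for cutoff in (10, 100, 1000):
    print(f"Emax = {cutoff:5d}  exponent = {scaling_exponent(cutoff):.4f}")
```

For this toy sum the estimated exponent approaches 3 as the cutoff grows. In the actual models it is the analogous exponent, read off for a given 3d object, that carries the information described above.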